Two mathematical tidbits that I came across recently, posted mainly for my own benefit.

1. L1 distance between probability density functions

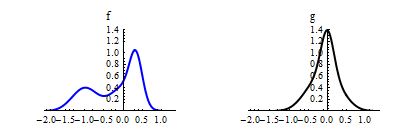

If and

are two random variables with densities

and

, respectively, the density

of the scaled variable

is

and similarly for , where

. Using this transformation rule, we see that the

distance between

and

satisfies

Setting , we get

This latter result also holds for -dimensional vector random variables (for which the transformation rule is

).

In their book on the approach to nonparametric density estimation, Luc Devroye and László Györfi prove a much more general version of this result, which includes as a special case the fact that

distance between PDFs is invariant under continuous, strictly monotonic transformations of the coordinate axes. In the one-dimensional case, this means that one can have a visual idea about the

distance between two PDFs by bringing the real line into a finite interval like

by a monotonic transformation.

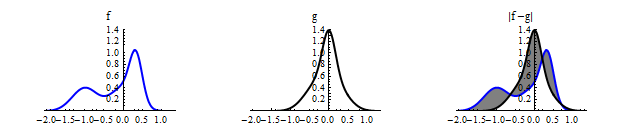

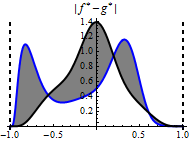

The figure below shows the transformed version of the PDFs above, via the transformations ,

. The area of the gray region inside the interval

below is the same as the area of the gray region in the third plot above (which is spread over the whole real line).

You can read the proof of the general version of the theorem in the introductory chapter of the Devroye-Györfi book, which you can download here. Luc Devroye has many other freely accessible resources on his website, and he offers the following quote as an aid in understanding him.

You can read the proof of the general version of the theorem in the introductory chapter of the Devroye-Györfi book, which you can download here. Luc Devroye has many other freely accessible resources on his website, and he offers the following quote as an aid in understanding him.

2. L1 penalty and sparse regression: A mechanical analogy

Suppose we would like to find a linear fit of the form to a data set

, where

,

, and

. Ordinary least-squares approach to this problem consists of seeking an

-dimensional vector

that minimizes

One sometimes considers regularized versions of this approach by including a “penalty” term for , and minimizing the alternative objective function

where is a tuning parameter, and

is a function that measures the “size” of the vector

. When

,

we have what is called ridge regression, and when

,

we have the so-called LASSO. Clearly, in both cases, increasing the scale of the penalty term results in solutions with “smaller”

, according to the appropriate notion of size.

Perhaps a bit surprisingly, for the case of penalty (LASSO), one often gets solutions where some

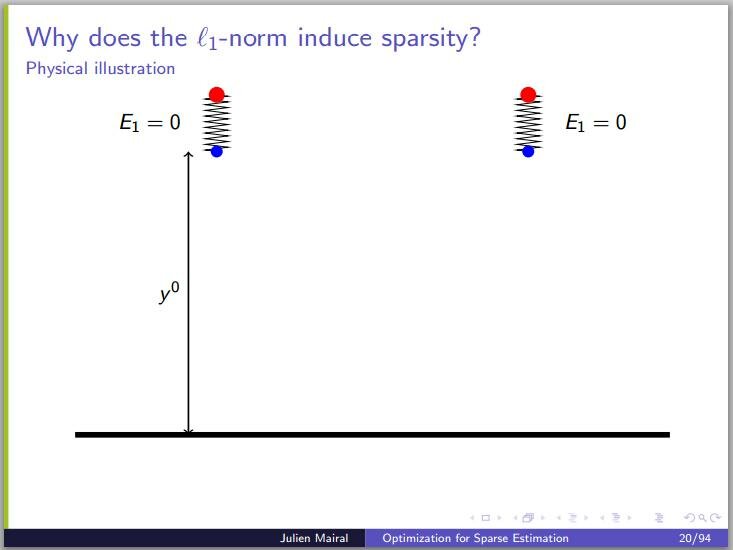

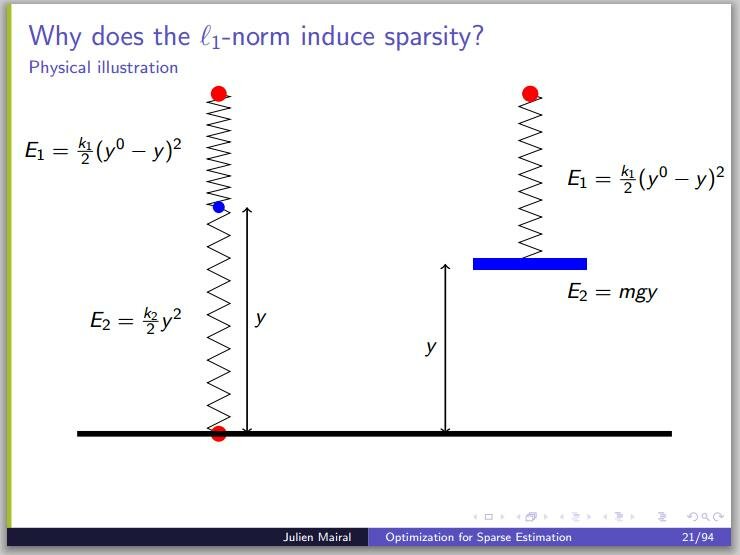

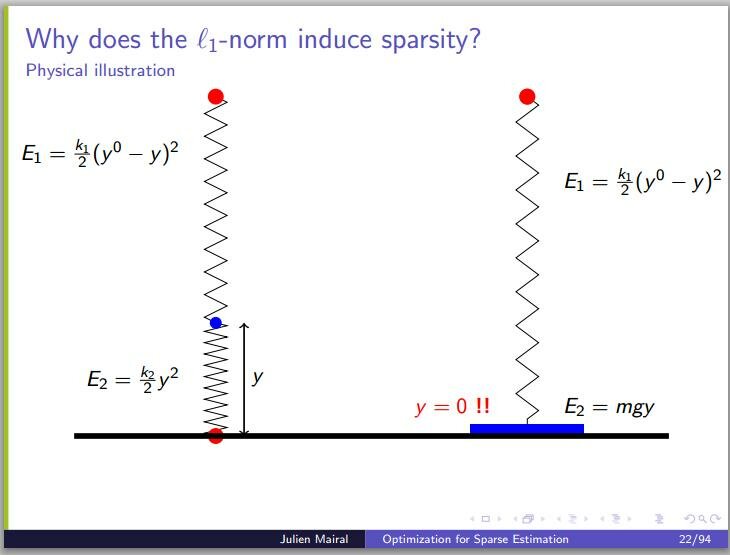

are not just small, but exactly zero. I recently came across an intuitive explanation of this fact based on a mechanical analogy, on a blog devoted to compressed sensing and related topics. The following three slides reproduced from a presentation by Julien Mairal perhaps do not exactly constitute a “proof without words“, but are really helpful nevertheless. The

terms in the figures represent the

and

versions of the penalty function as “spring” and “gravitational” energies, respectively. Increasing the spring constant

makes

smaller, but not zero. Increasing the gravity (or the mass), on the other hand, eventually makes

zero.

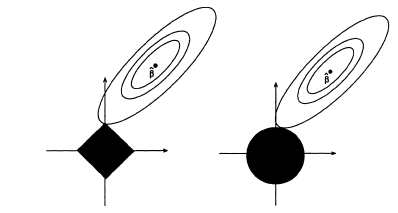

In case you haven’t seen it before, here is a visual from the original LASSO paper by Tibshirani, comparing and

penalties in a two-dimensional problem (

denotes the solution of the ordinary least squares problem, without any constraint or penalty):

This provides another intuitive explanation of the sparsity of solutions obtained from LASSO. In order to make sense of this figure, you should keep in mind that the regularized problems above are equivalent to the constrained problem

where is analogous to the tuning parameter

, and

stands for

or

penalty, as above. (If this was a bit too cryptic, you can take look at the original LASSO paper, which, according to Google Scholar, has been cited more than 9000 times.)